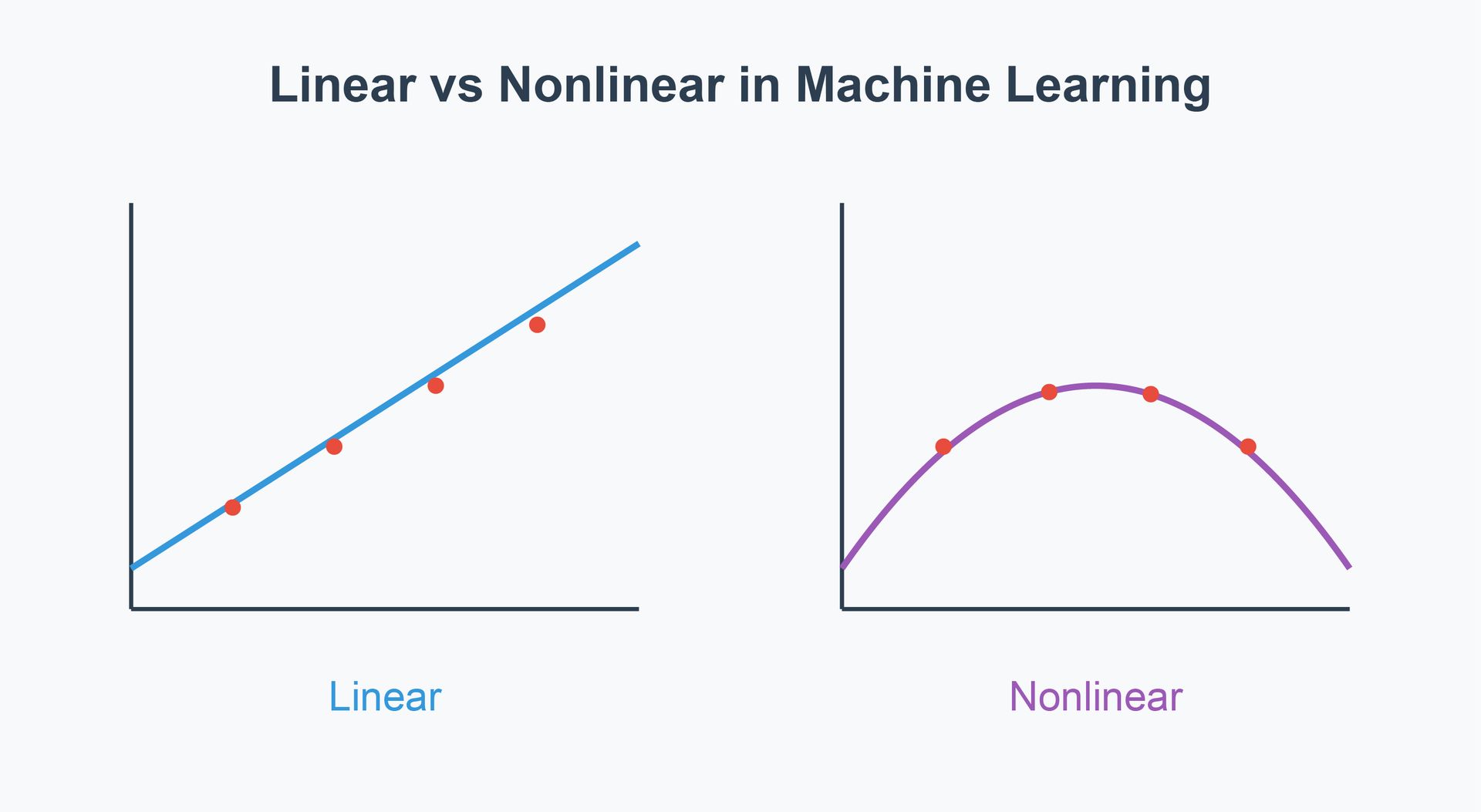

Machine learning is here to change the way we interact with technology. There are two main types of models, associated with the names linear and non-linear machine learning optimization, which operate within the realm of machine learning. However, to select an appropriate model for a given problem, to improve performance, as well as to increase predictive accuracy, it is well to know these differences.

Linear Models In Machine Learning

Linear models are one of the simplest models in machine learning and have a linear relationship between the input (X) and the output (Y). For instance, the linear regression model is the most common example, in which the main assumption is that the change in the output variable is directly proportional to the change of other (input) variables.

Key Characteristics of Linear Models:

1. Simplicity and Interpretability:

Linear models are easy and can be easily understood. As they require fewer parameters, they are less susceptible to overfitting on smaller datasets compared to more complex models.

2. Assumption of Linearity:

These models do not operate under this assumption because real-world data is not always linearly additive.

3. Performance with Linearly Separable Data:

Data is linearly separable, and therefore linear models are good at it. They are very much trainable quickly and it’s easy to understand their results.

4. Common Algorithms:

Other than linear regression, the linear algorithms include logistic regression, linear discriminant analysis, and support vector machines with the linear kernel.

Nonlinear Models In Machine Learning

Such datasets have relationships with complex scenarios beyond linear separability and hence non-linear machine learning optimization models are designed to deal with them. By being able to model curves and complex surfaces, they are more flexible and can fit datasets over which linear models will not make good predictions.

Key Characteristics of Nonlinear Models:

1. Complex Relationships:

Nonlinear models can capture complex patterns and relationships between input and output variables, and therefore, they tend to yield better predictions when the relationship between input and output variables is also complex.

2. Flexibility:

These models offer greater flexibility and can fit a wide variety of functions. This makes them suitable for tasks such as image and speech recognition, where linear equations cannot capture data.

3. Risk of Overfitting:

Due to their complexity, nonlinear models are more prone to overfitting, especially with small datasets. Overfitting occurs when a model captures noise along with the underlying pattern in the data.

4. Common Algorithms:

Nonlinear algorithms include decision trees, neural networks, and support vector machines with nonlinear kernels such as polynomial, radial basis function (RBF), and sigmoid kernels.

Choosing Between Linear and Nonlinear Models

Choosing between linear and non-linear machine learning optimization models depends on several factors, including the complexity of the data, the size of the dataset, and the specific problem at hand.

Complexity of Data:

If the data exhibits a simple pattern, linear models can suffice. However, for complex patterns, nonlinear models are more appropriate.

Size of the Dataset:

Linear models can be more effective with smaller datasets since they generalize well without overfitting. Nonlinear models require larger datasets to accurately discern the underlying relationships.

Computational Resources:

Linear models are less computationally intensive than nonlinear models, making them suitable for applications where processing power is limited.

Conclusion

In conclusion, both linear and nonlinear models hold significant importance in machine learning. While linear models offer simplicity and speed, non-linear machine learning optimization models provide flexibility and increased accuracy for complex tasks. Understanding their differences aids in selecting the right model to achieve optimal results, catering to the specific needs of the machine learning task at hand.